NVIDIA Triton Server flaws pose a severe threat. A newly disclosed set of critical security vulnerabilities affects NVIDIA’s Triton Inference Server for Windows, allowing unauthenticated attackers to execute arbitrary code. This creates significant risk for artificial intelligence (AI) systems built on the server. Cloud security giant Wiz discovered the issues, and its findings highlight growing security challenges in AI infrastructure.

Here are the key takeaways from these alarming discoveries:

- Critical NVIDIA Triton Server flaws impact Windows deployments.

- Attackers can achieve unauthenticated remote code execution.

- Wiz, a cloud security firm, identified and disclosed these issues.

- Deployed AI models and systems face substantial risks.

- This highlights the urgent need for robust AI security.


Discovery of NVIDIA Triton Server Flaws

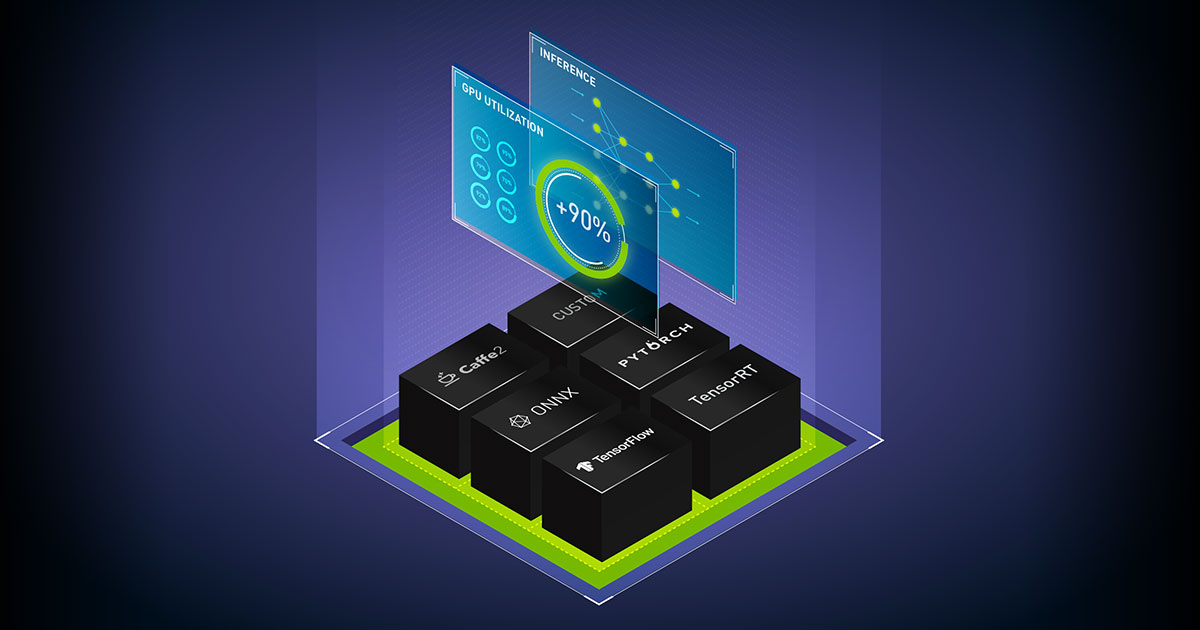

The security community recently learned about significant issues in widely used open-source software. Triton helps developers deploy AI models from frameworks like TensorFlow and PyTorch. Researchers at Wiz publicly disclosed these vulnerabilities on August 4, 2025. Wiz is a prominent cloud security firm known for its research into cloud-native and AI security risks.

The main concern is unauthenticated remote code execution. An attacker could gain control of a vulnerable Triton Inference Server without any prior credentials. Malicious actors prize this capability because it offers a direct path to compromise: once in, an attacker can run their own commands and take over the server.

Specific technical details are not fully elaborated here, but the combined impact signals a serious security lapse. Because the flaws affect the Windows version of Triton Inference Server, the immediate scope is narrower. That does not reduce the severity for organizations running affected deployments.

Critical Risks Posed by Triton Server Flaws

NVIDIA Triton Inference Server is a foundational AI component. It bridges trained AI models and the applications that consume their predictions. Its job is to serve models efficiently, handling concurrent requests and maximizing throughput across workloads such as image recognition, natural language processing, and predictive analytics.
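To make the serving role concrete, the sketch below builds a client request for Triton's HTTP endpoint. It is a minimal illustration assuming the KServe v2 inference protocol that Triton implements and Triton's default HTTP port (8000). The model name `my_model`, the input name `INPUT0`, and the helpers `infer_url` and `build_infer_request` are hypothetical placeholders, not part of an NVIDIA client library; a real model declares its own input names, shapes, and datatypes in its configuration.

```python
import json

# Triton's default HTTP port; individual deployments may change it.
DEFAULT_HTTP_PORT = 8000


def infer_url(host: str, model: str, port: int = DEFAULT_HTTP_PORT) -> str:
    """URL for a KServe v2 inference call: POST /v2/models/<model>/infer."""
    return f"http://{host}:{port}/v2/models/{model}/infer"


def build_infer_request(input_name: str, values: list, datatype: str = "FP32") -> str:
    """Build the JSON body for a single-row inference request.

    input_name must match an input declared in the model's configuration;
    the values are sent as a flat list with shape [1, len(values)].
    """
    body = {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, len(values)],
                "datatype": datatype,
                "data": values,
            }
        ]
    }
    return json.dumps(body)


if __name__ == "__main__":
    # Hypothetical model and input names, for illustration only.
    print(infer_url("localhost", "my_model"))
    print(build_infer_request("INPUT0", [1.0, 2.0, 3.0]))
```

Any host that can reach these endpoints can attempt such requests, which is why network exposure matters so much for flaws of this kind.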

Compromising an inference server through unauthenticated code execution poses severe, multifaceted risks:

- Data Exfiltration: Attackers might access sensitive data, including proprietary business data or personally identifiable information (PII).

- Intellectual Property Theft: AI models themselves could be stolen, exposing trade secrets and proprietary model architectures.

- Model Manipulation and Sabotage: Attackers can tamper with AI models to produce incorrect predictions, or disrupt them to cause denial of service.

- Lateral Movement: A compromised server becomes a beachhead from which attackers can move laterally within the network.

- Supply Chain Attacks: Impact could cascade to downstream applications and to customers who rely on the server's inferences.

- Reputational Damage: Organizations face severe reputational harm, including loss of customer trust, along with potential regulatory fines.

Wiz’s disclosure highlights an evolving AI threat landscape. Because AI systems are now critical to operations and infrastructure, securing components like inference servers is paramount: a single vulnerability can have widespread impact across an organization's AI initiatives.

Addressing AI Security Challenges from Triton Server Flaws

These flaws in NVIDIA Triton highlight the urgent need for a proactive approach to AI security. NVIDIA will likely release patches and advisories, but the industry must also recognize that threats like these NVIDIA Triton Server flaws are continuous.

Organizations deploying AI models should prioritize key security measures:

- Prompt Patching: Apply vendor security patches as soon as they are available. Up-to-date software is the first line of defense.

- Network Segmentation: Isolate AI inference servers and limit their access to other critical systems.

- Least Privilege Access: Enforce strict access controls so that only authorized personnel and services can reach the servers.

- Continuous Monitoring: Deploy robust logging and monitoring to detect unusual activity and unauthorized access attempts.

- Security Audits: Conduct regular audits and penetration tests to identify weaknesses before attackers exploit them.

- Secure Configuration: Follow best practices for Triton Server setup: disable unnecessary services and harden default settings.
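Network segmentation and continuous monitoring can reinforce each other. As a rough sketch, the Python below labels individual requests by source address and path, the kind of rule a reverse proxy or log pipeline in front of Triton might apply. The subnets, the function names `in_networks` and `classify_request`, and the allow/alert/block policy are hypothetical assumptions for illustration. The one Triton-specific detail is that its model repository extension exposes index, load, and unload operations under `/v2/repository/`. This complements vendor patches and firewall rules rather than replacing them.

```python
import ipaddress

# Hypothetical policy for illustration: the subnet allowed to reach the
# inference server at all, and a smaller admin subnet allowed to manage models.
ALLOWED_CLIENTS = [ipaddress.ip_network("10.20.0.0/16")]
ADMIN_CLIENTS = [ipaddress.ip_network("10.20.5.0/24")]

# Triton's model repository extension (index, load, unload) lives under this path.
CONTROL_PREFIX = "/v2/repository/"


def in_networks(addr: str, networks: list) -> bool:
    """True if addr falls inside any of the given networks."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in networks)


def classify_request(src_ip: str, path: str) -> str:
    """Label one request as 'allow', 'alert', or 'block'.

    - block: the source is outside the segmented client network.
    - alert: a non-admin host is calling a model-management endpoint.
    - allow: everything else.
    """
    if not in_networks(src_ip, ALLOWED_CLIENTS):
        return "block"
    if path.startswith(CONTROL_PREFIX) and not in_networks(src_ip, ADMIN_CLIENTS):
        return "alert"
    return "allow"


if __name__ == "__main__":
    # Example (source IP, request path) pairs, e.g. parsed from a proxy's access log.
    samples = [
        ("203.0.113.7", "/v2/models/my_model/infer"),
        ("10.20.1.4", "/v2/models/my_model/infer"),
        ("10.20.1.4", "/v2/repository/models/my_model/load"),
        ("10.20.5.9", "/v2/repository/index"),
    ]
    for src, path in samples:
        print(classify_request(src, path), src, path)
```

Flagging rather than blocking management calls from ordinary clients is a deliberate choice in this sketch: it avoids breaking legitimate workflows while still surfacing unexpected attempts to change which models are loaded.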

Collaboration between researchers and vendors is crucial. Early detection and responsible disclosure give vendors time to develop fixes before vulnerabilities are widely exploited.

Future of AI Security Amidst Triton Server Flaws

As AI adoption accelerates across industries, the focus on securing the entire AI lifecycle, from data ingestion and training to deployment and inference, will intensify. Vulnerabilities in components like NVIDIA Triton Inference Server are potent reminders that AI security is not an afterthought. It is a fundamental requirement for resilient AI systems.

The security community and practitioners must remain vigilant. Research from firms like Wiz helps identify and mitigate risks before they undermine AI’s immense potential. Securing AI is a shared responsibility: rapid AI advancements need equally robust security measures.

Frequently Asked Questions About NVIDIA Triton Server Flaws

What are the NVIDIA Triton Server flaws?

The NVIDIA Triton Server flaws are a set of critical security vulnerabilities affecting the Windows version of the inference server. They enable unauthenticated attackers to execute arbitrary code remotely.

Who discovered these critical vulnerabilities?

These critical vulnerabilities were discovered and publicly disclosed by researchers at Wiz, a prominent cloud security firm specializing in cloud-native and AI security risks.

How can organizations protect against these AI security risks?

Organizations should apply security patches promptly, implement network segmentation, enforce least privilege access, deploy continuous monitoring, conduct regular security audits, and follow secure configuration best practices for their AI inference servers.
