The promise of multi-agent AI systems, where a team of specialized agents collaborates to solve complex problems, is alluring. Frameworks like OpenAI’s Swarm and Microsoft’s AutoGen encourage this approach. In practice, however, the added complexity often causes a significant drop in reliability. The very frameworks designed to empower developers can lead them into building fragile, inconsistent, and ultimately ineffective systems.

This article, guided by insights from experts at Cognition AI (the creators of Devin), will dismantle the multi-agent hype. We will explore the two fundamental principles you must follow to build reliable agents. You will learn why the most common multi-agent architectures fail and see a simple, effective structure that works, plus an advanced version for long-duration tasks.

The Dangerous Allure of Multi-Agent Frameworks

A year ago, the AI space was buzzing with hype around frameworks designed to facilitate multi-agent AI systems. The idea of creating a “team” of AI agents—a planner, a coder, a critic—working in parallel sounds powerful.

However, many developers who have invested hundreds of hours into this approach have found that the more complexity and agents you introduce, the lower the reliability becomes. This isn’t a niche observation; it’s a critical flaw noted by top AI labs like Cognition and Anthropic. The simplest examples in these frameworks often use multiple agents for a simple task, which is a huge mistake.

Two Unbreakable Principles for Reliable AI Agents

Walden Yan, a co-founder of Cognition AI, argues that most failures in multi-agent AI systems stem from violating two core principles. Adhering to these is so critical that any architecture that breaks them should be avoided by default.

- Principle 1: Share Full Context. Agents must have access to the full history of the task, including all prior actions, decisions, and reasoning, not just the final output of the previous step.

- Principle 2: Actions Carry Implicit Decisions. Every action an agent takes is based on an implicit decision. If agents operate on different, uncommunicated decisions, their actions will conflict, leading to bad results.

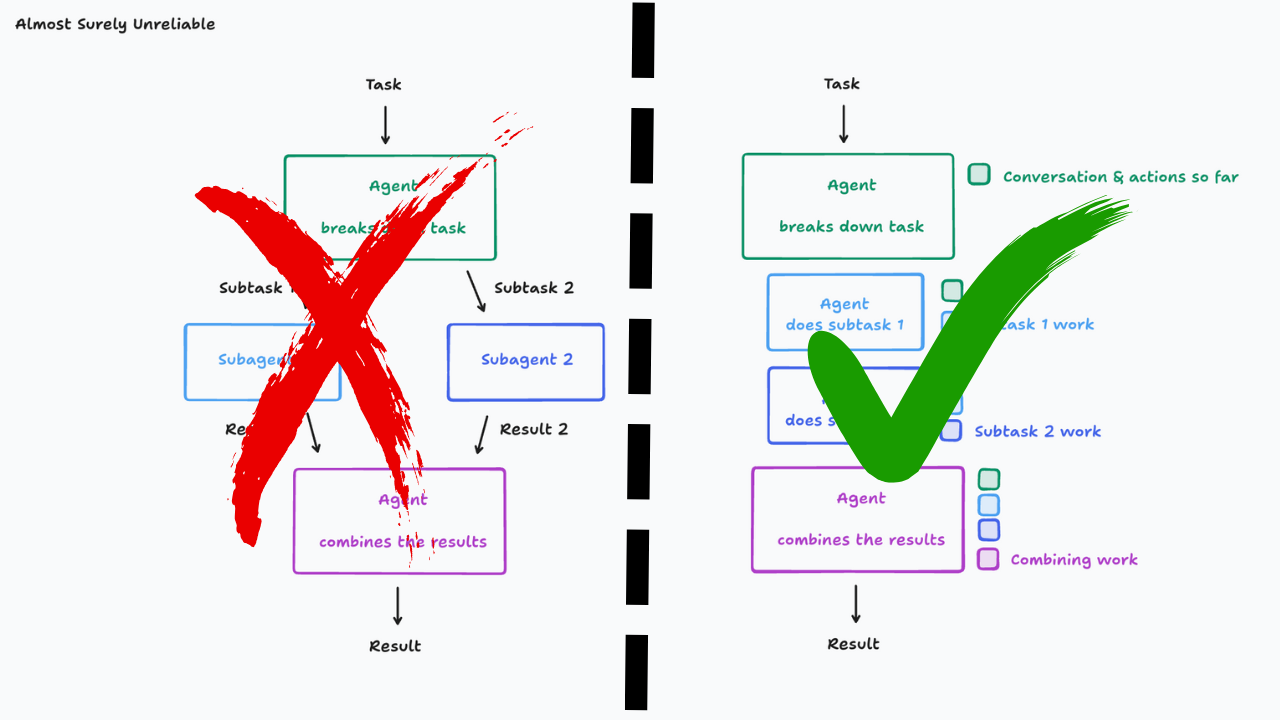

Flawed Architecture: The Parallel Processing Problem

The most common and tempting multi-agent architecture is also the most fragile.

In this setup, a main agent breaks a task into subtasks and delegates them to multiple sub-agents that run in parallel. These sub-agents work simultaneously and do not communicate with each other. They then send their individual results back to the main agent to be combined.

Why It Fails

This almost never works in production. Since the agents run in parallel and do not share context about what the others are doing, their work is often inconsistent.

For example, imagine a task to “design a new mobile game.”

- Sub-agent 1 (Story) might create a storyline for a vibrant, futuristic city.

- Sub-agent 2 (Visuals), working in isolation, might create a visual style with dark, gritty tones for a horror game.

The main agent is left with the impossible job of combining two completely misaligned outputs. This happens because the sub-agents’ actions were based on conflicting implicit decisions that neither one could see.
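The failure mode can be reproduced with a toy fan-out. The sketch below is illustrative only: `story_agent`, `visuals_agent`, and `run_parallel` are hypothetical stand-ins for LLM-backed sub-agents, and their hard-coded outputs mimic the game design example. It is not code from any particular framework.

```python
from concurrent.futures import ThreadPoolExecutor


def story_agent(subtask: str) -> dict:
    # Hypothetical stub for an LLM call. It sees only its own subtask,
    # so it silently decides on a setting by itself.
    return {"role": "story", "setting": "vibrant futuristic city"}


def visuals_agent(subtask: str) -> dict:
    # Hypothetical stub. It also sees only its own subtask and makes a
    # different, uncommunicated decision about the setting.
    return {"role": "visuals", "setting": "dark, gritty horror"}


def run_parallel(task: str) -> list[dict]:
    # The main agent splits the task and fans the pieces out in parallel.
    subtasks = [
        (story_agent, f"Write a storyline for: {task}"),
        (visuals_agent, f"Define a visual style for: {task}"),
    ]
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(agent, subtask) for agent, subtask in subtasks]
        return [future.result() for future in futures]


results = run_parallel("design a new mobile game")

# The main agent now has to merge outputs built on different assumptions.
settings = {result["setting"] for result in results}
conflict = len(settings) > 1
print("Conflicting implicit decisions:", conflict)
```

Nothing in `run_parallel` gives either sub-agent a way to learn what the other decided, which is why the mismatch only surfaces at merge time.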

The Proven Architecture: A Simple, Linear, and Reliable Agent

The simplest and most reliable way to follow the core principles is to use a single-threaded, linear agent.

Instead of running in parallel, the main agent calls sub-agents sequentially.

- A task comes in. The main agent calls Sub-agent 1, giving it the full context.

- Sub-agent 1 completes its work.

- The main agent then calls Sub-agent 2, giving it the original context PLUS the complete output and reasoning from Sub-agent 1.

Why It Works

This is the key to reliability. Sub-agent 2 has complete awareness of the situation, including the decisions and actions made by Sub-agent 1. This ensures all work is consistent and builds upon what came before. Using the same game design task, the visual agent would see the futuristic city concept and create a matching art style.
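A minimal sketch of this sequential hand-off, using the same game design task, might look like the following. The `Step` and `Context` classes and both agent functions are hypothetical names chosen for illustration; in a real system each agent function would wrap a model call rather than return fixed strings.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    agent: str
    output: str
    reasoning: str  # kept alongside the output so later agents can see why


@dataclass
class Context:
    task: str
    history: list[Step] = field(default_factory=list)


def story_agent(ctx: Context) -> Step:
    # Hypothetical stub for an LLM call that receives the full context.
    return Step(
        agent="story",
        output="vibrant futuristic city",
        reasoning="The task asks for a new mobile game; chose an upbeat sci-fi setting.",
    )


def visuals_agent(ctx: Context) -> Step:
    # Reads the story agent's decision from the shared history
    # instead of inventing its own setting.
    setting = next(
        (step.output for step in ctx.history if step.agent == "story"),
        "unspecified",
    )
    return Step(
        agent="visuals",
        output=f"art style matching: {setting}",
        reasoning=f"Followed the setting already chosen by the story agent ({setting}).",
    )


def run_linear(task: str, agents: list[Callable[[Context], Step]]) -> Context:
    ctx = Context(task=task)
    for agent in agents:
        # Each agent sees the original task plus every prior step.
        ctx.history.append(agent(ctx))
    return ctx


ctx = run_linear("design a new mobile game", [story_agent, visuals_agent])
for step in ctx.history:
    print(f"{step.agent}: {step.output}")
```

The only coordination mechanism here is the growing `history` list, which is the point: consistency comes from shared context, not from extra negotiation logic.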

For most use cases, this simple and reliable architecture is all you need.

Advanced Architecture: Managing Large-Scale Tasks with Context Compression

A potential issue with the simple linear model is that for very large tasks (e.g., analyzing a massive codebase with dozens of files), the context window can overflow.

For these truly long-duration tasks, a more advanced (and harder to implement) architecture can be used: a linear agent with context compression.

The process is still sequential, but with an added step:

- Before passing the history to the next agent, a compressor agent (a dedicated LLM) summarizes the previous conversation and actions.

- The next agent receives this compressed context, which contains only the most essential information and key decisions.

This allows the system to handle much more complex, longer-running tasks without hitting context limits. However, this adds a new layer of complexity and is only worth it if you are consistently overflowing the context window.
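The compression step can be sketched as follows, under some loud assumptions: token counts are approximated by word counts, history entries are plain strings, and `compress` is a hypothetical placeholder that keeps only entries marked as decisions. In a real system the compressor would be a dedicated LLM call, and deciding what counts as essential is the hard part.

```python
def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    # A real system would use the model's own tokenizer.
    return len(text.split())


def compress(history: list[str]) -> str:
    # Hypothetical stand-in for the compressor agent. Here it keeps only
    # entries explicitly marked as decisions and drops everything else.
    decisions = [entry for entry in history if entry.startswith("DECISION:")]
    return "SUMMARY: " + " | ".join(decisions)


def context_for_next_agent(history: list[str], budget: int) -> list[str]:
    # Pass the full history while it fits; compress only on overflow.
    total = sum(estimate_tokens(entry) for entry in history)
    if total <= budget:
        return list(history)
    return [compress(history)]


history = [
    "DECISION: setting is a vibrant futuristic city",
    "NOTE: " + "explored alternative settings " * 50,
    "DECISION: art style is bright neon",
]

compact = context_for_next_agent(history, budget=40)
print(compact[0])
```

Keeping the full history whenever it fits reflects the trade-off described above: compression is lossy, so this sketch applies it only when the budget is actually exceeded.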

Why Simulating Human Collaboration Fails in AI

A common counterargument is: “Why not just have the agents talk to each other to resolve conflicts?” This is what humans do.

The problem is that agents are not humans. They lack the nuanced intelligence and proactive discourse skills required for effective negotiation. Forcing agents to “collaborate” in this way often results in more fragility, not less. Even top models from companies like Anthropic and OpenAI are not designed for this. Anthropic’s own approach with Claude shows that sub-agents are typically used for narrow, investigative tasks, with the main agent always staying in control of writing code and taking primary action.

The Road Ahead for Building Agents

The conclusion is clear: the most effective way to build reliable AI agents today is to keep the architecture simple. Avoid the trap of complex, parallel multi-agent systems. Instead, build upon a single-threaded, linear architecture where the full context is shared sequentially.

This approach ensures that every action is informed by all relevant decisions made previously, leading to consistent, predictable, and reliable results.

What are your thoughts on this approach to building AI agents? Have you experienced the failures of parallel systems firsthand? Let us know in the comments.